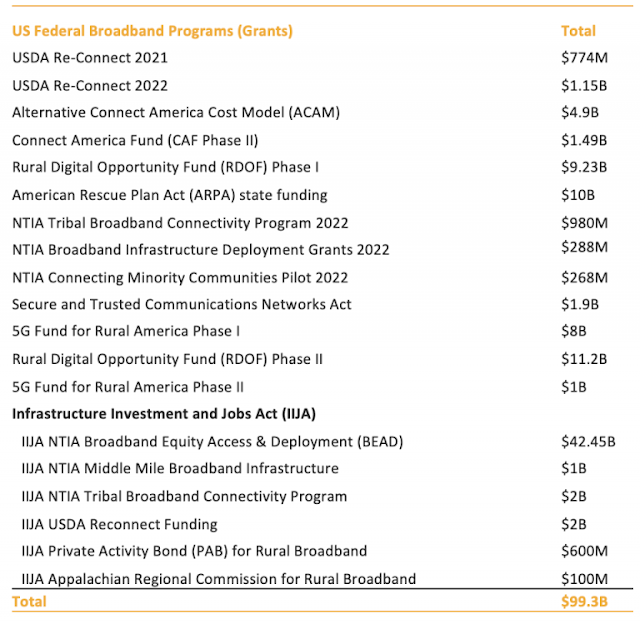

In this installment, we’re going to focus on public cloud optimization, which differs slightly from its on-premises counterpart. In an on-premises data center, infrastructure is generally finite in scale and fixed in cost. By the time a new physical server hits the floor, the capital has already been spent and has already hit your business’s bottom line. In this context, on-premises optimization means maximizing utilization of the sunk cost of capital infrastructure (while still assuring performance of the workload, of course).

In the public cloud, however, infrastructure is effectively infinite. Resources are generally far more elastic and often paid for out of an operating expenditure budget rather than a capital budget. In this case, cloud optimization means minimizing cloud spend, and the burden of maximizing hardware utilization falls to the cloud provider. Minimizing cloud spend proves to be a daunting exercise for cloud administrators given the public cloud’s vast array of instance sizes and types (over 400 in Amazon Web Services alone, as shown in Figure 1), all with slightly different resource profiles and costs, and with options and pricing changing almost daily. At scale, selecting the ideal instance type, size, term, etc. for every workload at every moment in order to assure performance and minimize spend is arguably an impossible task for a human, but is an ideal use case for the IWO decision engine.

Figure 1: Amazon Web Services instance types

Taking action in the public cloud

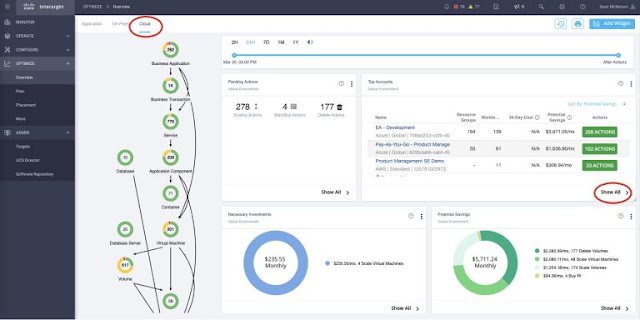

So let’s take a look at the types of real-time actions IWO offers for public cloud optimization. In Figure 2, starting on the Cloud tab of the main Supply Chain screen, we see a number of widgets on the right with actionable information – Pending Actions, Top Accounts, Necessary Investments, Potential Savings, etc.

Figure 2: Supply Chain view of the Public Cloud and Pending Actions widget

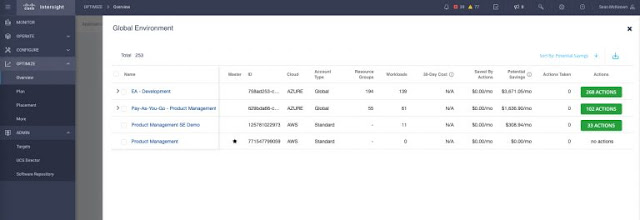

Clicking on “Show All” in the Top Accounts widget, we see a list of all our public cloud accounts and subscriptions in a hierarchical table, as shown in Figure 3.

Figure 3: Public cloud account details table

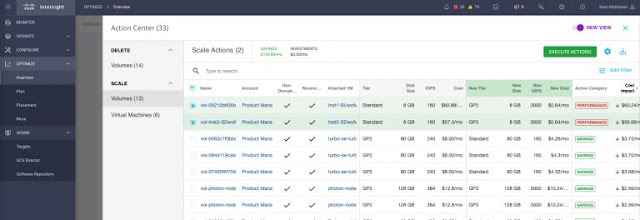

Clicking on one of the green action buttons on the right, we see the current pending actions for a specific account, as shown in Figure 4. There we see a number of storage volume actions highlighted, some addressing performance needs, others capturing savings where storage is over-provisioned (i.e., you can move to a cheaper tier of storage and still assure performance).

Figure 4: Action Center table with details on specific pending storage actions for a given account

In this specific example, a keen-eyed reader might notice something curious about the two performance actions at the top of the list: even though the actions are being taken to provide more IOPS (moving from 160 to 3000 IOPS) to assure performance, the cost impact is actually lower. That’s right: these actions provide more performance for less cost! While perhaps not common, this example shows just how quirky the plethora of public cloud options is, and how difficult it can be for humans to avoid leaving money on the table. (These actions are also non-disruptive and reversible, as noted in the table, and can be executed immediately with the click of a button. What’s not to like?)

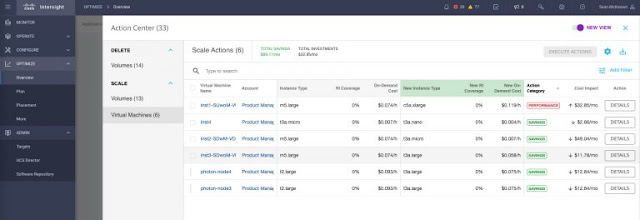

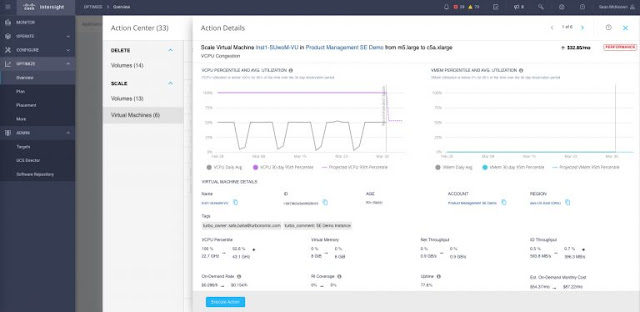

Clicking on the Scale Virtual Machines tab in the Action Center list, we see the current pending actions to rightsize our VMs, as shown in Figure 5.

Figure 5: Action Center table with details on specific pending VM actions for a given account

Clicking on the details button in the first row takes us to the Action Details window, which provides clear data behind the decision as well as the expected outcome of the action from both a performance and a cost perspective, as shown in Figure 6. We can also conveniently run the action with a single button click, right from the dashboard interface.

Figure 6: Action Details for a specific VM scaling action

This detailed information is available for every action IWO recommends, across all workloads in all cloud accounts. Choosing the right action, with even just a handful of workloads, is difficult for a human. Getting it right across many tens, hundreds, or thousands of workloads spread across multiple accounts in multiple clouds in real time is a problem that IWO is uniquely positioned to solve.

Reserved instances: rent or lease?

To further complicate matters for a cloud administrator, you have the option of consuming instances on demand (i.e., pay as you use) or via Reserved Instances (RIs), which you pay for in advance for a fixed term (usually a year or more). RIs can be incredibly attractive because they are typically heavily discounted compared to their on-demand counterparts, but they are not without their pitfalls.

The fundamental challenge of consuming RIs is that you will pay for the RI whether you use it or not. In this respect, RIs become more like the sunk cost of a physical server on-premises than the intermittent cost of an on-demand cloud instance. One can think of on-demand instances as being well-suited for temporary or highly variable workloads, analogous to a car-less city dweller renting a car: usually cost-effective for an occasional weekend trip, but cost-prohibitive for long-term use. RIs are akin to leasing a car: often the right economic choice for longer-term, more predictable usage patterns (say, commuting an hour to work each day).
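The rent-versus-lease reasoning above comes down to a break-even calculation. The following is a minimal sketch, not IWO's method: the hourly rates are made-up placeholders rather than real provider prices, and the function names are hypothetical. It assumes the RI is billed for every hour of a one-year term whether the instance runs or not.

```python
HOURS_PER_YEAR = 8760  # assumed one-year RI term


def break_even_utilization(on_demand_hourly, ri_effective_hourly):
    """Fraction of the term a workload must run before the RI is the cheaper option."""
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return ri_effective_hourly / on_demand_hourly


def compare_costs(hours_used, on_demand_hourly, ri_effective_hourly,
                  term_hours=HOURS_PER_YEAR):
    """Return (cheaper option, on-demand cost, reserved cost) for one workload."""
    on_demand_cost = hours_used * on_demand_hourly
    # The RI is paid for the whole term, used or not (the "sunk cost").
    reserved_cost = term_hours * ri_effective_hourly
    choice = "reserved" if reserved_cost < on_demand_cost else "on-demand"
    return choice, on_demand_cost, reserved_cost


if __name__ == "__main__":
    od_rate, ri_rate = 0.10, 0.06  # hypothetical $/hour, for illustration only
    print(f"break-even utilization: {break_even_utilization(od_rate, ri_rate):.0%}")
    # "Weekend renter": runs 48 hours a week.
    print(compare_costs(48 * 52, od_rate, ri_rate)[0])
    # "Daily commuter": runs around the clock.
    print(compare_costs(HOURS_PER_YEAR, od_rate, ri_rate)[0])
```

With these placeholder rates, a workload needs to run more than 60% of the term before reserving pays off, which is why the weekend-only workload stays on demand while the always-on workload is better served by an RI.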

When faced with a myriad of instance options and terms, you are generally forced down one of two paths: 1) only purchase RIs for workloads that are deemed static and consume on-demand instances for everything else (hoping, of course, that static workloads really do remain that way); or 2) pick a handful of RI instance types — e.g., small, medium, and large — and shoehorn all workloads, static or variable, into the closest fit. Both methods leave a lot to be desired.

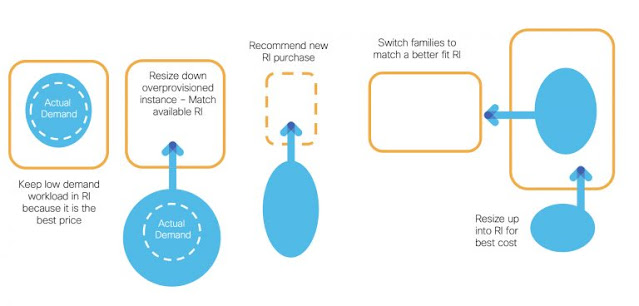

In the first case, it’s not at all uncommon for static workloads to have their demand change over time as app use grows or new functionality comes online. In these cases, the workload will need to be relocated to a new instance type, and the administrator will have an empty hole to fill in the form of the old, already paid-for RI (see examples in Figure 7).

Figure 7: Changes in workload demand can trigger numerous cascading decisions for RI consumption

What should be done with that hole? What’s the best workload to move into it? And if that workload is coming from its own RI, the problem simply cascades downstream. The unpredictability of such headaches often negates the potential cost savings of RIs.

In the second scenario, limiting the RI choices almost by definition means mismatching workloads to instance types, negatively affecting workload performance, cost savings, or both. In either case, human beings, even with complicated spreadsheets and scripts, will invariably get the answer wrong: the scale of the problem is too large, and everything changes constantly, so the analysis done last week is likely to be invalid this week.

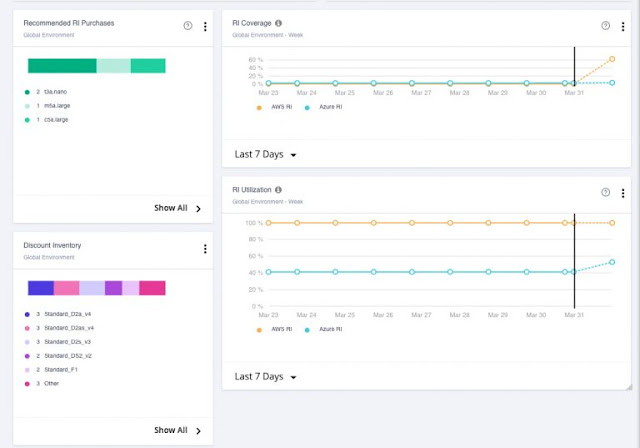

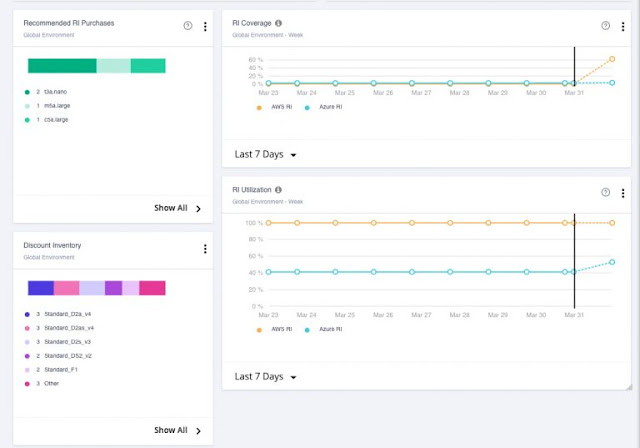

Thankfully, IWO was developed to understand both on-demand instances and RIs in detail through native API target integrations with popular public cloud providers like AWS and Azure. IWO constantly receives real-time data on consumption, pricing, and instance options directly from the cloud providers, and combines that data with knowledge of applicable customer-specific pricing and enterprise agreements to determine the best actions available at any given point in time.

Figure 8: Detailed inventory information and purchase actions for RIs

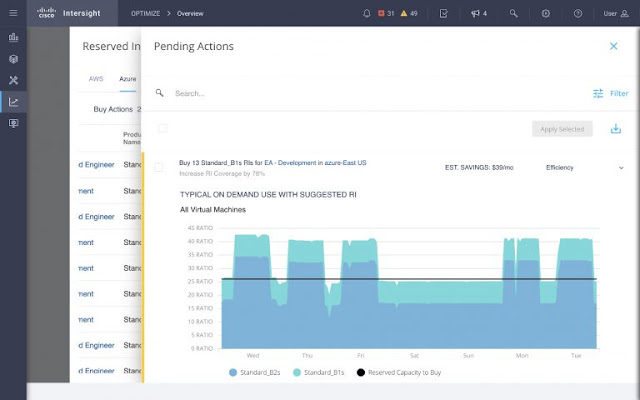

Not only does IWO technology understand current and historical workload requirements and an organization’s current RI inventory (see Figure 8), but it can also intelligently recommend the optimal consumption of existing RI inventory and additional RI purchases to minimize future spending. In Figure 9, we have a Pending Action to buy 13 RIs, which would take the RI coverage up to the horizontal black line in the chart. Most of the area under the blue and turquoise curves, representing the workload resource requirements, would be covered by RIs (everything below the black line). The peaks above the black line would be covered by on-demand purchases. While you could purchase enough RIs to cover all the area under the curve, this is not the most cost-effective option to meet workload demand, because RIs sized for the peaks would sit idle, yet still be paid for, most of the time.

Figure 9: Details supporting a specific RI purchase action
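The coverage trade-off behind Figure 9 can be illustrated with a small brute-force search. This is only a sketch under simplifying assumptions, not the IWO decision engine: a single instance type, a made-up demand series, placeholder rates in arbitrary cost units, and hypothetical function names.

```python
def total_cost(demand, reserved, on_demand_rate, ri_rate):
    """Cost of serving `demand` (instances needed per hour) with `reserved` RIs."""
    # Every RI is paid for every hour, whether it is used or not.
    ri_cost = reserved * ri_rate * len(demand)
    # Demand above the reserved line spills over to on-demand instances.
    overflow = sum(max(0, d - reserved) for d in demand)
    return ri_cost + overflow * on_demand_rate


def best_reserved_count(demand, on_demand_rate, ri_rate):
    """Try every RI count from 0 up to peak demand and keep the cheapest."""
    return min(
        range(max(demand) + 1),
        key=lambda n: total_cost(demand, n, on_demand_rate, ri_rate),
    )


if __name__ == "__main__":
    demand = [10, 12, 13, 13, 14, 20, 13, 11]  # hypothetical hourly demand
    od_rate, ri_rate = 10, 6                   # hypothetical cost units per hour
    n = best_reserved_count(demand, od_rate, ri_rate)
    print("RIs to hold:", n)
    print("cost at that level:", total_cost(demand, n, od_rate, ri_rate))
    print("cost covering the peak:", total_cost(demand, max(demand), od_rate, ri_rate))
```

In this toy series, reserving for the single peak hour costs noticeably more than holding a lower baseline and letting the peaks spill over to on-demand capacity, which mirrors the black line sitting below the top of the curves in the chart.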

Continuing with our car analogy, in addition to knowing whether it’s better to rent or lease a car in any given circumstance, IWO can even suggest a car lease (RI purchase) that can be used as a vehicle for ride-sharing. IWO can fluidly move on-demand workloads in and out of a given RI to achieve the lowest possible cost while still assuring performance.

In short, IWO can determine the optimal combination of RI purchases and on-demand spending across your entire public cloud estate, in real time.

Cloud migration planning

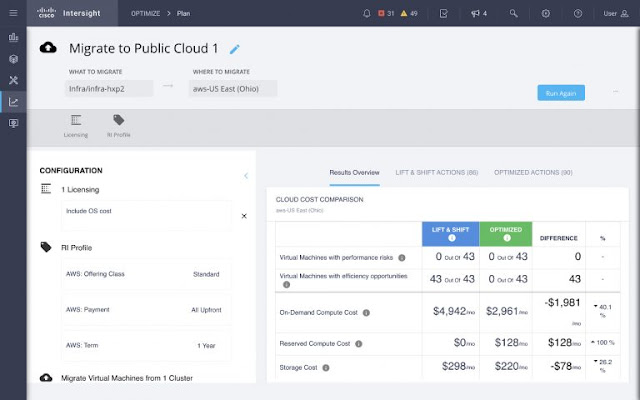

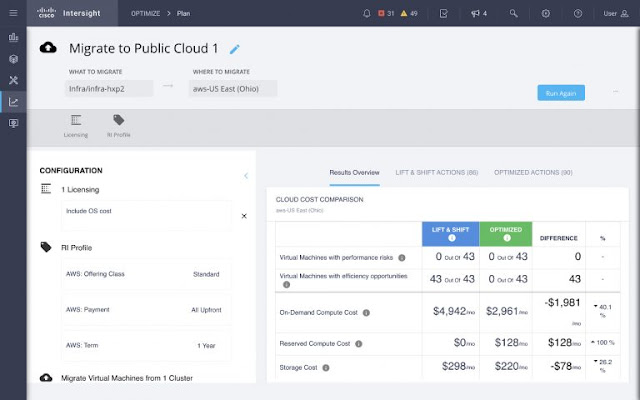

Finally, because IWO uses the same underlying decision engine for both the on-premises and public cloud environments, it can bridge the gap between them. IWO’s planning module can simulate migrating VM workloads from on-premises to the public cloud: you select specific VMs or VM groups, and the plan generates the optimal purchase actions required to run them, as shown in Figure 10.

Figure 10: On-prem to public cloud workload migration planning results

These plan results offer two options: Lift & Shift and Optimized, depicted in the blue and green columns, respectively. Lift & Shift shows the recommended instances to buy, and their costs, assuming no changes to the size of the existing VMs. Optimized allows for VM right-sizing in the process of moving to the cloud, which often results in a lower overall cost if current VMs are oversized relative to their workload needs. Software licensing (e.g., bring-your-own vs. buy from the cloud) and RI profile customizations are also available to further fine-tune the plan results.
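The difference between the two plan columns can be sketched as two calls to the same "cheapest instance that fits" lookup: one sized by what the VM is allocated, the other by what it actually uses. Everything here is an assumption for illustration: the catalog, its prices, the 20% headroom, and the function names are hypothetical and do not come from IWO or any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    mem_gib: int
    hourly: float  # hypothetical price, not a real provider rate


# Hypothetical catalog, for illustration only.
CATALOG = [
    InstanceType("small", 2, 4, 0.05),
    InstanceType("medium", 4, 16, 0.20),
    InstanceType("large", 8, 32, 0.40),
    InstanceType("xlarge", 16, 64, 0.80),
]


def cheapest_fit(vcpus, mem_gib, catalog=CATALOG):
    """Cheapest instance type meeting both requirements, or None if none fits."""
    fits = [t for t in catalog if t.vcpus >= vcpus and t.mem_gib >= mem_gib]
    return min(fits, key=lambda t: t.hourly) if fits else None


def lift_and_shift(alloc_vcpus, alloc_mem_gib):
    """Size by what the VM is allocated today."""
    return cheapest_fit(alloc_vcpus, alloc_mem_gib)


def optimized(peak_vcpus, peak_mem_gib, headroom=0.2):
    """Size by observed peak usage plus an assumed safety margin."""
    return cheapest_fit(peak_vcpus * (1 + headroom), peak_mem_gib * (1 + headroom))


if __name__ == "__main__":
    # A VM allocated 8 vCPU / 32 GiB that peaks at 2.5 vCPU / 10 GiB.
    print("Lift & Shift:", lift_and_shift(8, 32).name)
    print("Optimized:   ", optimized(2.5, 10).name)
```

For this oversized VM, the allocation-based lookup lands on a larger and more expensive type than the usage-based one, which is the effect the Optimized column captures.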

Have your cake and eat it too

IWO has the unique ability to apply the same market abstraction and analysis to both on-premises and public cloud workloads, in real time, enabling it to add value far beyond any cloud-specific or hypervisor-specific, point-in-time tools that may be available. Besides being multi-vendor, multi-cloud, and real-time by design, IWO does not force you to choose between performance assurance and cost/resource optimization.

Source: cisco.com