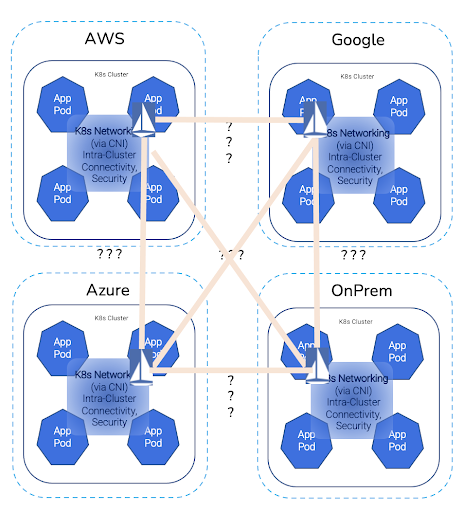

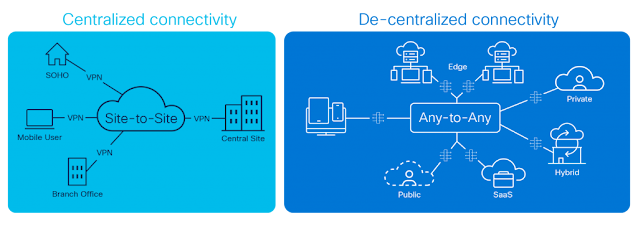

The digital transformation in this decade is demanding more from the network. Multi-cloud, edge, telework, 5G, and IoT are creating an evolved connectivity ecosystem characterized by highly distributed elements needing to communicate with one another in a complex, multi-domain, many-to-many fashion. The world of north-south, east-west traffic flows is quickly disappearing. The evolved connectivity demand is for more connections from more locations, to and from more applications, with tighter Service Level Agreements (SLAs) and involving many, many more endpoints.

Further, enterprises are moving data closer to the sources consuming it and are distributing their applications to drive optimized user experiences. All these new digital assets connect and interact across multiple clouds (private, hybrid, public, and edge).

• 70-80% of large enterprises are working toward executing a multi-cloud strategy

• The number of devices requiring communications will continue to grow

- IoT devices will account for 50% (14.7 billion) of all global networked devices by 2023

- Mobile subscribers will grow from 66% to 71% of the global population by 2023

• More applications and data requiring network connectivity in new places

- More than 50% of all workloads run outside the enterprise data center

- 90% of all applications support microservices architectures, enabling distributed deployments

• STL Partners’ forecast of the capacity of network edge computing estimates around 1,600 network edge data centers and 200,000 edge servers in 55 telco networks by 2025

Today’s service provider transport network finds itself on a collision course with this evolved connectivity ecosystem. The network is highly heterogeneous, spanning access, metro, WAN, and data center technologies. Stitching these silos together leads to an explosion of complexity and policy state in the network that exists simply to make the domains interoperate. The resulting architecture is burdened with a built-in complexity tax on operations, which hampers operator agility and innovation. As application and endpoint connectivity requirements become increasingly decentralized, with their functionality and data deployed across multiple domains, the underlying network is proving too rigid to adapt quickly enough. The status quo has become a complex connectivity mélange, with application experience entrusted to network overlays running over best-effort IP and innovation moving out of the network domain.

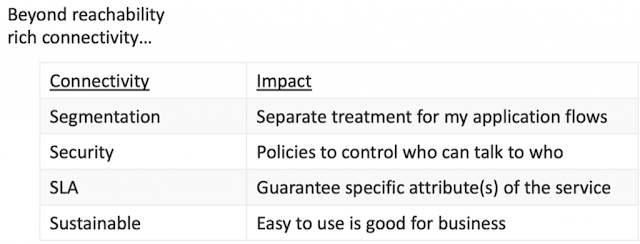

Our position: the network should operate like the cloud

As network providers, it’s time we started thinking like cloud providers. From the cloud provider’s perspective, their data centers are simply giant resource pools that their customers’ applications dynamically consume to perform computing and storage work. In the same way, we should think of the network as a resource pool for on-demand connectivity services such as segmentation, security, or SLA guarantees. This resource pool should be built on three key principles:

1. Minimize the capital and operational cost per forwarded Gb

2. Maximize the value the network provides per forwarded Gb (the value from the perspective of the application itself)

3. Eliminate friction or other barriers to applications consuming network services

The cloud operators simplify their resource pool as much as possible and ruthlessly standardize everything from data center facilities down through hardware, programmable interfaces, and infrastructure like hypervisors and container orchestration systems. All the simplification and standardization mean less cost to build, automate, and operate the infrastructure (Principle 1). More importantly, simplification means more resources to invest in innovation (Principle 2). The entire infrastructure can then be abstracted as a resource pool and presented as a catalog of services and APIs for customers’ applications to consume (Principle 3).

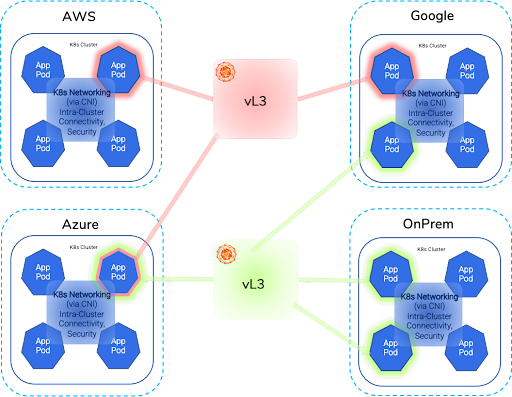

Our colleague Emerson Moura’s post later in this series focuses specifically on network simplification. However, we want to spend some time on the subject through the evolved connectivity and cloud provider lens. With connectivity spanning domains, the most fundamental thing we can do is standardize end-to-end on a common data plane, minimizing the stitching points between edge, data center, cloud, and transport networks. We refer to this as the Unified Forwarding Paradigm (UFP).

A common forwarding architecture allows us to simplify elsewhere such as IPAM, DNS, and first-hop security. Consistent network connectivity means fewer moving parts for operations as all traffic transiting edge, data center, and cloud would follow common forwarding behaviors and be subject to common policies and tools for filtering and service chaining. And there’s a bonus in common telemetry metrics as well!

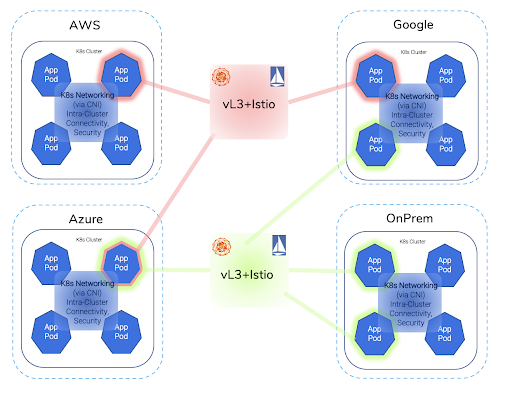

Our UFP recommendation is to adopt SRv6 wherever possible and ultimately IPv6 end-to-end. This common forwarding architecture provides a foundation for unified, service-aware forwarding across all network domains and includes familiar services like VPNs (EVPN, etc.) and traffic steering. More importantly, connectivity services may become software-defined. Moving to a UFP will lead to a massive reduction in friction and the network can make a true transition from configuration-centric to programmable, elastic, and on-demand. Imagine network connectivity services like pipes into the cloud or some edge environment moving to a demand-driven consumption model. Businesses no longer need to wait for operators to provision the network service. Operators would expose services via APIs for applications and users to consume in the same manner we consume VMs in the cloud: “I need an LSP/VPN to edge-zone X and I need it for two hours.” And as user and application behaviors change and require updates to the services they’re subscribed to, the change is executed via software and the network responds almost immediately.
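To make the demand-driven model a little more concrete, here is a minimal sketch of how a request like “I need a VPN to edge-zone X for two hours” might be represented in software. Everything in it is an illustrative assumption rather than a real operator API: the `ConnectivityRequest` and `Lease` names, the `service_type` and `destination` values, and the idea of modeling the service as a time-bounded lease are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ConnectivityRequest:
    """Hypothetical request for a time-bounded connectivity service."""
    service_type: str    # illustrative values such as "vpn" or "lsp"
    destination: str     # illustrative name such as "edge-zone-x"
    duration: timedelta  # how long the service should exist

    def lease(self, start: datetime) -> "Lease":
        # Reject requests that would never be active.
        if self.duration <= timedelta(0):
            raise ValueError("duration must be positive")
        return Lease(request=self, start=start, end=start + self.duration)


@dataclass(frozen=True)
class Lease:
    """Hypothetical record of a granted service and its validity window."""
    request: ConnectivityRequest
    start: datetime
    end: datetime

    def is_active(self, now: datetime) -> bool:
        # The service exists from start (inclusive) to end (exclusive).
        return self.start <= now < self.end


# "I need a VPN to edge-zone X and I need it for two hours."
request = ConnectivityRequest("vpn", "edge-zone-x", timedelta(hours=2))
start = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
lease = request.lease(start)
```

The point of the sketch is that the service carries its own lifetime: once the lease expires, the network can reclaim the resources without anyone filing a change request.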

The relationship between network overlay and underlay will also benefit from standardizing on SRv6/IPv6 and SDN. Today the overlay network is only as good as the underlay serving it. With a unified forwarding architecture and on-demand segment routing services, an SD-WAN system could directly access and consume underlay services for improved quality of experience. For latency-sensitive flows, the overlay network would subscribe to an underlay behavior that delivers traffic with the lowest available latency. For overlay networks, an SDN-controlled SRv6 underlay provides a richer connectivity experience.
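As a rough illustration of an overlay consuming underlay behaviors, the sketch below picks an underlay path per flow class. It is a simplification under stated assumptions: the `UnderlayPath` type, the path names, the latency and cost figures, and the rule that non-latency-sensitive flows take the cheapest path are all hypothetical, and a real SDN controller would weigh far more than two metrics.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class UnderlayPath:
    """Hypothetical underlay path advertised to the overlay."""
    name: str
    latency_ms: float  # assumed measured one-way latency
    cost: int          # assumed relative cost metric


def pick_path(paths: Sequence[UnderlayPath], latency_sensitive: bool) -> UnderlayPath:
    """Choose the lowest-latency path for latency-sensitive flows,
    and the lowest-cost path otherwise (an assumed policy)."""
    if not paths:
        raise ValueError("no underlay paths available")
    if latency_sensitive:
        return min(paths, key=lambda p: p.latency_ms)
    return min(paths, key=lambda p: p.cost)


# Illustrative values only.
paths = [
    UnderlayPath("path-a", latency_ms=12.0, cost=10),
    UnderlayPath("path-b", latency_ms=30.0, cost=5),
]
voice_path = pick_path(paths, latency_sensitive=True)
bulk_path = pick_path(paths, latency_sensitive=False)
```

Here the latency-sensitive flow lands on `path-a` and the bulk flow on `path-b`, which is the kind of per-flow differentiation an overlay cannot achieve over an undifferentiated best-effort underlay.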