What Time Is It?

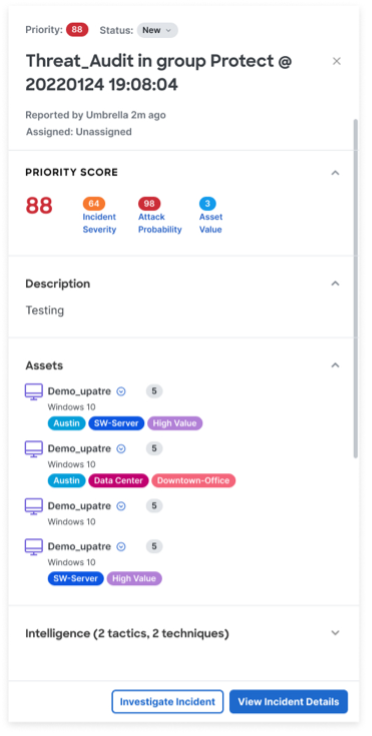

It’s been a minute since my last update on our network security strategy, but we have been busy building some awesome capabilities to enable true new-normal firewalling. As we release Secure Firewall 4200 Series appliances and Threat Defense 7.4 software, let me bring you up to speed on how Cisco Secure elevates to protect your users, networks, and applications like never before.

Secure Firewall leverages inference-based traffic classification and cooperation across the broader Cisco portfolio, which continues to resonate with cybersecurity practitioners. The reality of hybrid work remains a challenge to the insertion of traditional network security controls between roaming users and multi-cloud applications. The lack of visibility and blocking from a 95% encrypted traffic profile is a painful problem that hits more and more organizations; a few lucky ones get in front of it before the damage is done. Both network and cybersecurity operations teams look to consolidate multiple point products, reduce noise, and do more with less; the Cisco Secure Firewall and Workload portfolio masterfully navigates all aspects of network insertion and threat visibility.

Protection Begins with Connectivity

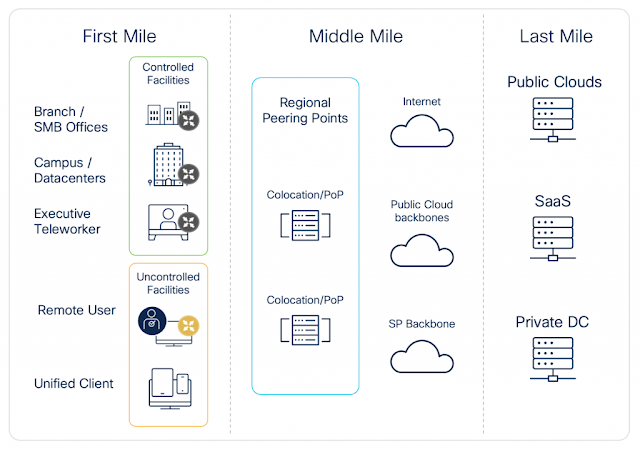

Even the most effective and efficient security solution is useless unless it can be easily inserted into an existing infrastructure. No organization would go through the trouble of redesigning a network just to insert a firewall at a critical traffic intersection. Security devices should natively speak the network’s language, including encapsulation methods and path resiliency. With hybrid work driving much more distributed networks, our Secure Firewall Threat Defense software followed suit by expanding the existing dynamic routing capabilities with application- and link quality-based path selection.

Application-based policy routing has been a challenge for the firewall industry for quite some time. While some vendors use their existing application identification mechanisms for this purpose, those require multiple packets in a flow to pass through the device before the classification can be made. Since most edge deployments use some form of NAT, switching an existing stateful connection to a different interface with a different NAT pool is impossible after the first packet. I always get a chuckle when reading those configuration guides that first tell you how to enable application-based routing and then promptly caution you against it due to NAT being used where NAT is usually used.

Our Threat Defense software takes a different approach, allowing common SaaS application traffic to be directed or load-balanced across specific interfaces even when NAT is used. In the spirit of leveraging the power of the broader Cisco Secure portfolio, we ported over a thousand cloud application identifiers from Umbrella, which are tracked by IP addresses and Fully Qualified Domain Name (FQDN) labels so the application-based routing decision can be made on the first packet. Continuous updates and inspection of transit Domain Name System (DNS) traffic ensure that the application identification remains accurate and relevant in any geography.
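To make the first-packet idea concrete, here is a minimal Python sketch. It is not the Threat Defense implementation; the class name `FirstPacketAppRouter`, the interface names, and the sample domain-to-application table are all hypothetical. It only illustrates the principle: if transit DNS answers have already tied a destination IP to a known application, the egress interface can be chosen before any payload is seen, so the NAT pool never has to change mid-flow.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address


@dataclass
class FirstPacketAppRouter:
    """Toy model: learn IP-to-application mappings from DNS, route on packet one."""

    fqdn_to_app: dict          # FQDN suffix -> application name (hypothetical table)
    app_to_interface: dict     # application name -> egress interface (hypothetical)
    default_interface: str = "outside1"
    ip_to_app: dict = field(default_factory=dict)

    def observe_dns_answer(self, fqdn, resolved_ip):
        """Record which application a resolved IP belongs to, if the FQDN is known."""
        name = fqdn.lower().rstrip(".")
        for suffix, app in self.fqdn_to_app.items():
            if name == suffix or name.endswith("." + suffix):
                self.ip_to_app[ip_address(resolved_ip)] = app
                return app
        return None

    def select_interface(self, dst_ip):
        """Choose the egress interface using only the first packet's destination IP."""
        app = self.ip_to_app.get(ip_address(dst_ip))
        return self.app_to_interface.get(app, self.default_interface)


router = FirstPacketAppRouter(
    fqdn_to_app={"webex.com": "webex"},
    app_to_interface={"webex": "outside2"},
)
router.observe_dns_answer("meet.webex.com.", "203.0.113.10")
print(router.select_interface("203.0.113.10"))  # learned from DNS: outside2
print(router.select_interface("198.51.100.7"))  # unknown destination: outside1
```

Because the lookup needs only the destination address, the decision is final before the connection and its NAT translation are created.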

This application-based routing functionality can be combined with other powerful link selection capabilities to build highly flexible and resilient Software-Defined Wide Area Network (SD-WAN) infrastructures. Secure Firewall now supports routing decisions based on link jitter, round-trip time, packet loss, and even voice quality scores against a particular monitored remote application. It also enables traffic load-balancing with up to 8 equal-cost interfaces and administratively defined link succession order on failure to optimize costs. This allows a branch firewall to prioritize trusted WebEx application traffic directly to the Internet over a set of interfaces with the lowest packet loss. Another low-cost link can be used for social media applications, and internal application traffic is directed to the private data center over an encrypted Virtual Tunnel Interface (VTI) overlay. All these interconnections can be monitored in real-time with the new WAN Dashboard in Firewall Management Center.
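The link selection logic described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not product code: the `LinkMetrics` type, the `select_links` function, and the sample measurements are invented for the example. Only the monitored metrics and the limit of 8 equal-cost interfaces come from the text.

```python
from dataclasses import dataclass

MAX_EQUAL_COST_INTERFACES = 8  # load-balancing limit mentioned in the text


@dataclass(frozen=True)
class LinkMetrics:
    """Hypothetical per-interface measurements toward a monitored application."""

    name: str
    jitter_ms: float
    rtt_ms: float
    packet_loss_pct: float


def select_links(links, metric="packet_loss_pct", tolerance=0.0):
    """Return the interfaces tied for the lowest value of the chosen metric."""
    if not links:
        return []
    best = min(getattr(link, metric) for link in links)
    chosen = [link.name for link in links if getattr(link, metric) - best <= tolerance]
    return chosen[:MAX_EQUAL_COST_INTERFACES]


links = [
    LinkMetrics("isp1", jitter_ms=2.0, rtt_ms=30.0, packet_loss_pct=0.1),
    LinkMetrics("isp2", jitter_ms=5.0, rtt_ms=20.0, packet_loss_pct=0.1),
    LinkMetrics("lte", jitter_ms=9.0, rtt_ms=80.0, packet_loss_pct=1.5),
]
print(select_links(links))                   # ['isp1', 'isp2']
print(select_links(links, metric="rtt_ms"))  # ['isp2']
```

The `tolerance` parameter is an assumption added so that links with nearly identical measurements can still share traffic. A real deployment would also apply the administratively defined succession order on failure.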

Divide by Zero Trust

The obligatory inclusion of Zero Trust Network Access (ZTNA) into every vendor’s marketing collateral has become a pandemic of its own in the last few years. Some security vendors got so lost in their implementation that they had to add an internal version control system. Once you peel away the colorful wrapping paper, ZTNA is little more than a per-application Virtual Private Network (VPN) tunnel with an aspiration for a simpler user experience. With hybrid work driving users and applications all over the place, a secure remote session to an internal payroll portal should be as simple as opening the browser – whether on or off the enterprise network. Often enough, the danger of carelessly implemented simplicity lies in compromising the security.

A few vendors extend ZTNA only to the initial application connection establishment phase. Once a user is multi-factor authenticated and authorized with their endpoint’s posture validated, full unimpeded access to the protected application is granted. This approach often results in shamefully successful breaches where valid user credentials are obtained to access a vulnerable application, pop it, and then laterally spread across the rest of the no-longer-secure infrastructure. Sufficiently motivated bad actors can go as far as obtaining a managed endpoint that goes along with those “borrowed” credentials. It’s not entirely uncommon for a disgruntled employee to use their legitimate access privileges for less than noble causes. The simple conclusion here is that the “authorize and forget” approach is mutually exclusive with the very notion of a Zero Trust framework.

Secure Firewall Threat Defense 7.4 software introduces a native clientless ZTNA capability that subjects remote application sessions to the same continuous threat inspection as any other traffic. After all, this is what Zero Trust is all about. A granular Zero Trust Application Access (ZTAA – see what we did there?) policy defines individual or grouped applications and allows each one to use its own Intrusion Prevention System (IPS) and File policies. The inline user authentication and authorization capability interoperates with every web application and Security Assertion Markup Language (SAML) capable Identity Provider (IdP). Once a user is authenticated and authorized upon accessing a public FQDN for the protected internal application, the Threat Defense instance acts as a reverse proxy with full TLS decryption, stateful firewall, IPS, and malware inspection of the flow. On top of the security benefits, it eliminates the need to decrypt the traffic twice as one would when separating all versions of legacy ZTNA and inline inspection functions. This greatly improves the overall flow performance and the resulting user experience.

Let’s Decrypt

Speaking of traffic decryption, it is generally seen as a necessary evil in order to operate any Deep Packet Inspection (DPI) functions at the network layer – from IPS to Data Loss Prevention (DLP) to file analysis. With nearly all network traffic being encrypted, even the most efficient IPS solution will just waste processing cycles by looking at the outer TLS payload. Having acknowledged this simple fact, many organizations still choose to avoid decryption for two main reasons: fear of severe performance impact and potential for inadvertently breaking some critical communication. With some security vendors still not including TLS inspected throughput on their firewall data sheets, it is hard to blame those network operations teams who are cautious around enabling decryption.

Building on the architectural innovation of Secure Firewall 3100 Series appliances, the newly released Secure Firewall 4200 Series firewalls kick the performance game up a notch. Just like their smaller cousins, the 4200 Series appliances employ custom-built inline Field Programmable Gate Array (FPGA) components to accelerate critical stateful inspection and cryptography functions directly within the data plane. This industry-first inline crypto acceleration design eliminates the need for costly packet traversal across the system bus and frees up the main CPU complex for more sophisticated threat inspection tasks. These new appliances keep the compact single Rack Unit (RU) form factor and scale to over 1.5Tbps of threat inspected throughput with clustering. They will also provide up to 34 hardware-level isolated and fully functional FTD instances for critical multi-tenant environments.

Those network security administrators who look for an intuitive way of enabling TLS decryption will enjoy the completely redesigned TLS Decryption Policy configuration flow in Firewall Management Center. It separates the configuration process for inbound (an external user to a private application) and outbound (an internal user to a public application) decryption and guides the administrator through the necessary steps for each type. Advanced users will retain access to the full set of TLS connection controls, including non-compliant protocol version filtering and selective certificate blocklisting.

Not-so-Random Additional Screening

Applying decryption and DPI at scale is all fun and games, especially with hardware appliances that are purpose-built for encrypted traffic handling, but it is not always practical. The majority of SaaS applications use public key pinning or bi-directional certificate authentication to prevent man-in-the-middle decryption even by the most powerful of firewalls. No matter how fast the inline decryption engine may be, there is still a pronounced performance degradation from indiscriminately unwrapping all TLS traffic. With both operational costs and complexity in mind, most security practitioners would prefer to direct these precious processing resources toward flows that present the most risk.

Lucky for those who want to optimize security inspection, our industry-leading Snort 3 threat prevention engine includes the ability to detect applications and potentially malicious flows without having to decrypt any packets. The integral Encrypted Visibility Engine (EVE) is the first in the industry implementation of Machine Learning (ML) driven flow inference for real-time protection within the data plane itself. We continuously train it with petabytes of real application traffic and tens of thousands of daily malware samples from our Secure Malware Analytics cloud. It produces unique application and malware fingerprints that Threat Defense software uses to classify flows by examining just a few outer fields of the TLS protocol handshake. EVE works especially well for identifying evasive applications such as anonymizer proxies; in many cases, we find it more effective than the traditional pattern-based application identification methods. With Secure Firewall Threat Defense 7.4 software, EVE adds the ability to automatically block connections that classify high on the malware confidence scale. In a future release, we will combine these capabilities to enable selective decryption and DPI of those high-risk flows for truly risk-based threat inspection.
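As a rough illustration of classifying a flow from unencrypted handshake fields alone, consider the toy Python sketch below. It is emphatically not how EVE works internally: a plain lookup table stands in for the trained ML model, and the function names, the field selection, the sample fingerprint database, and the 0.9 blocking threshold are all assumptions made for the example.

```python
import hashlib


def handshake_fingerprint(tls_version, cipher_suites, extensions):
    """Hash a few cleartext ClientHello fields into a stable identifier."""
    parts = [
        tls_version,
        ",".join(str(c) for c in cipher_suites),
        ",".join(str(e) for e in extensions),
    ]
    return hashlib.sha256("|".join(parts).encode()).hexdigest()


def verdict(fingerprint, known, block_threshold=0.9):
    """Return (label, action); block when malware confidence meets the threshold."""
    label, malware_confidence = known.get(fingerprint, ("unknown", 0.0))
    action = "block" if malware_confidence >= block_threshold else "allow"
    return label, action


# Hypothetical fingerprint database: fingerprint -> (label, malware confidence).
browser = handshake_fingerprint("1.3", [4865, 4866], [0, 10, 11])
dropper = handshake_fingerprint("1.2", [47, 53], [0])
known = {browser: ("web browser", 0.01), dropper: ("malware dropper", 0.97)}

print(verdict(browser, known))  # ('web browser', 'allow')
print(verdict(dropper, known))  # ('malware dropper', 'block')
```

Nothing in this sketch touches the encrypted payload, which is the point: the verdict is available during the handshake, before any decryption resources are spent.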

The other trick for making our Snort 3 engine more precise lies in cooperation across the rest of the Cisco Secure portfolio. Very few cybersecurity practitioners out there like to manually sift through tens of thousands of IPS signatures to tailor an effective policy without blowing out the performance envelope. Cisco Recommendations from Talos has traditionally made this task much easier by enabling specific signatures based on actually observed host operating systems and applications in a particular environment. Unfortunately, there’s only so much that a network security device can discover by either passively listening to traffic or even actively poking those endpoints. Secure Workload 3.8 release supercharges this ability by continuously feeding actual vulnerability information for specific protected applications into Firewall Management Center. This allows Cisco Recommendations to create a much more targeted list of IPS signatures in a policy, thus avoiding guesswork, improving efficacy, and eliminating performance bottlenecks. Such an integration is a prime example of what Cisco Secure can achieve by augmenting network level visibility with application insights; this is not something that any other firewall solution can implement with DPI alone.
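The narrowing effect of feeding real vulnerability data into signature selection can be shown with a small Python sketch. The function `recommend_signatures`, the signature IDs, the host names, and the placeholder CVE identifiers are all hypothetical; this is only the set-intersection idea, not the actual Cisco Recommendations logic.

```python
def recommend_signatures(signatures, host_vulnerabilities):
    """Keep only signatures that cover a vulnerability actually present on some host.

    signatures: dict of signature ID -> set of vulnerability IDs it protects against
    host_vulnerabilities: dict of host name -> set of vulnerability IDs reported for it
    """
    present = set().union(*host_vulnerabilities.values())
    return sorted(sid for sid, covered in signatures.items() if covered & present)


# Placeholder identifiers, not real CVEs or real signature IDs.
signatures = {
    "sig-1001": {"CVE-0000-0001"},
    "sig-1002": {"CVE-0000-0002", "CVE-0000-0003"},
    "sig-1003": {"CVE-0000-0004"},
}
host_vulnerabilities = {
    "payroll-app": {"CVE-0000-0003"},
    "web-frontend": {"CVE-0000-0001"},
}
print(recommend_signatures(signatures, host_vulnerabilities))  # ['sig-1001', 'sig-1002']
```

Signatures with no matching vulnerability in the environment, such as `sig-1003` here, stay disabled and consume no inspection cycles.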

Light Fantastic Ahead

Secure Firewall 4200 Series appliances and Threat Defense 7.4 software are important milestones in our strategic journey, but it by no means stops there. We continue to actively invest in inference-based detection techniques and tighter product cooperation across the entire Cisco Secure portfolio to bring value to our customers by solving their real network security problems more efficiently. As you may have heard from me at the recent Nvidia GTC event, we are actively developing hardware acceleration capabilities to combine inference and DPI approaches in hybrid cloud environments with Data Processing Unit (DPU) technology. We continue to invest in endpoint integration both on the application side with Secure Workload and the user side with Secure Client to leverage flow metadata in policy decisions and deliver a truly hybrid ZTNA experience with Cisco Secure Access. Last but not least, we are redefining the fragmented approach to public cloud security with Cisco Multi-Cloud Defense.

The light of network security continues to shine bright, and we thank you for the opportunity to build the future of Cisco Secure together.

Source: cisco.com