I’m about to let you in on a little secret; actually, it’s a big secret. What if I told you that you could save millions, even tens of millions, of dollars in two hours or less? If you were the CFO of a mobile operator, would that get your attention? What if I told you that the larger and busier your mobile network is, the more money you could save? What if the savings ran into the hundreds of millions or even billions of dollars?

Now that I have your attention, let me tell you a bit about the Cisco Ultra Traffic Optimization (CUTO) solution. I could tell you that it is a vendor-agnostic solution for both the RAN and the mobile packet core. I could tell you how CUTO uses machine learning algorithms, or about its proactive cross-traffic contention detection. I could tell you about elephant flows and how the CUTO software optimizes the packet scheduler in RAN networks. I could write a whole blog post on the CUTO technology and how it works, but I won’t. I’ll save the technical details for another time.

What I want to share today are two important facts about our CUTO solution:

1) We have helped multiple operators install and turn on CUTO networkwide in less than 2 hours.

2) Real-world deployments are demonstrating material savings, including a recent trial with a large Tier 1 operator that resulted in calculated savings of several billion dollars.

Of all the network segments operators invest in, spectrum and RAN tend to be a top priority, from both a CAPEX and an OPEX standpoint. This is because mobile network operators have thousands, if not tens of thousands, of cell towers with accompanying RAN equipment. The amount of equipment required and the cost of a truck roll per site lead to enormous expenses whenever you want to upgrade or augment the RAN. With network traffic growing faster every year, the challenge of staying ahead of demand grows too. Major RAN augmentations can take months, if not years, to complete, especially once you factor in government regulations and permitting processes.

This is where one aspect of the CUTO solution stands out: its ease and speed of deployment. CUTO’s purpose is to optimize the RAN and improve the efficiency of spectrum use. Instead of sending an army of service trucks and technicians to each and every cell tower, you deploy CUTO in the core of the network, which spans a very small number of sites (data centers). Making things even easier, CUTO can run on commercial off-the-shelf (COTS) servers or, better yet, on the Network Functions Virtualization Infrastructure (NFVI) stack already in the mobile network core. Installing and deploying CUTO is as easy as spinning up a few virtual machines. Real operators on live networks have installed and deployed CUTO networkwide in less than 2 hours. Service providers talk a lot about MTTD (mean time to detect an issue) and MTTR (mean time to repair it), but with CUTO they can talk about MTTME: mean time to millions earned (or rather, saved).

If you look at the present mode of operation (PMO) for most mobile operators, there’s a very typical workflow in RAN networks. Customers’ consumption of video and their insatiable appetite for bandwidth eventually lead to a capacity trigger in the network, alerting the operator that a cell site is congested. To handle the alert, operators typically have three options:

1) They might be able to “re-farm” spectrum, transitioning 3G spectrum to 4G. When this is an option, it typically yields about a 40% spectrum gain at a price tag of about $22K in CAPEX per site, with very nominal OPEX. This option is relatively quick and simple, and it delivers a good capacity improvement.

2) They might be able to deploy a new spectrum band or increase antenna sectorization density. This option yields about a 20% to 30% capacity gain but comes at a price tag of about $80K in CAPEX per site and about $20K in OPEX. It is a relatively long and costly process for a marginal capacity improvement, and high-quality “beachfront” spectrum is impossible to find in many markets around the world.

3) If neither option 1 nor option 2 is possible, the operator needs to build a new cell site and split cells (tighten reuse). A new site yields about an 80% capacity gain, depending on the site’s placement, user distribution, terrain, shadowing, and so on, and costs roughly $250K in CAPEX and about $65K/year in OPEX. This is an extremely long process (permitting, etc.) and very expensive.
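To compare the three options on a like-for-like basis, the short Python sketch below divides each option’s per-site CAPEX by its approximate capacity gain. It is an illustration built only on the rough figures quoted above, with assumptions made for this example: the 20% to 30% range for option 2 is taken at its 25% midpoint, OPEX is left out, and the `UpgradeOption` type and `capex_per_gain_point` helper are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UpgradeOption:
    name: str
    capacity_gain_pct: float  # approximate capacity gain quoted in the text
    capex_per_site: float     # one-time CAPEX per site (USD)


OPTIONS = [
    UpgradeOption("1 (re-farm 3G to 4G)", 40, 22_000),
    # Assumption: midpoint of the quoted 20%-30% range.
    UpgradeOption("2 (new band / sectorization)", 25, 80_000),
    UpgradeOption("3 (new site / cell split)", 80, 250_000),
]


def capex_per_gain_point(option):
    """CAPEX spent per percentage point of capacity gained at one site."""
    return option.capex_per_site / option.capacity_gain_pct


if __name__ == "__main__":
    for opt in OPTIONS:
        cost = capex_per_gain_point(opt)
        print(f"Option {opt.name}: ${cost:,.0f} CAPEX per point of capacity gain")
```

With these inputs, option 1 works out to about $550 of CAPEX per percentage point of capacity, versus roughly $3,200 for option 2 and $3,125 for option 3, so each fallback option costs more than five times as much per point of capacity as re-farming.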

Unfortunately, because spectrum is a finite resource, opportunities for operators to choose option 1 or 2 are becoming scarce. Just five years ago, about 50% of congested cell sites were candidates for option 1, 30% were candidates for option 2, and only 20% required option 3. Five years from now, virtually no cell sites will be candidates for option 1, maybe 10% will be candidates for option 2, and over 90% will require the very time-consuming and costly option 3.

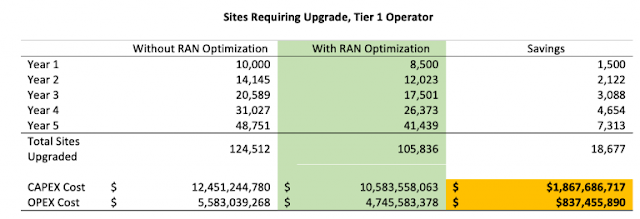
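The shift in option mix can be turned into a rough expected CAPEX per congested site. The sketch below weights each option’s per-site CAPEX by its share of congested sites. It is a minimal illustration assuming the future mix is exactly 0% / 10% / 90%, that each congested site gets one upgrade, and ignoring OPEX and differences in capacity gain; the dictionary names and the `expected_capex_per_site` function are hypothetical.

```python
# Per-site CAPEX for each upgrade option, using the rough figures quoted above (USD).
CAPEX_PER_SITE = {1: 22_000, 2: 80_000, 3: 250_000}

# Share of congested sites (in percent) that fall to each option.
MIX_FIVE_YEARS_AGO = {1: 50, 2: 30, 3: 20}
# Assumption: "virtually none" -> 0%, "maybe 10%" -> 10%, remainder -> option 3.
MIX_FIVE_YEARS_OUT = {1: 0, 2: 10, 3: 90}


def expected_capex_per_site(mix_pct):
    """Weighted-average CAPEX per congested site for a given option mix."""
    if sum(mix_pct.values()) != 100:
        raise ValueError("option shares must sum to 100%")
    return sum(share * CAPEX_PER_SITE[opt] for opt, share in mix_pct.items()) / 100


if __name__ == "__main__":
    then = expected_capex_per_site(MIX_FIVE_YEARS_AGO)
    later = expected_capex_per_site(MIX_FIVE_YEARS_OUT)
    print(f"Five years ago:      ${then:,.0f} per congested site")
    print(f"Five years from now: ${later:,.0f} per congested site")
    print(f"Increase:            {later / then:.1f}x")
```

Under these assumptions, the expected CAPEX per congested site climbs from about $85K to about $233K, roughly 2.7 times as much, before counting option 3’s much higher OPEX.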

CUTO offers mobile operators an alternative: it optimizes traffic and reduces congestion at cell sites, which can significantly reduce the number of sites requiring capacity upgrades. To measure CUTO’s efficacy in the real world and at scale, we recently deployed it in a trial with a Tier 1 operator. Almost immediately, we saw a 15% reduction in the number of cell sites triggering a capacity upgrade due to consistent congestion. Here are the real-world numbers we used to calculate the savings from that 15% reduction:

The operator in this use case has:

• ~40M subscribers

• 10,000 sites triggering a need for a capacity upgrade

• Annual data traffic growth rate of 25%

The assumptions we agreed to with the operator were:

• Blended Incremental CAPEX/Upgrade (options 1, 2, and 3) = $100K CAPEX

• Blended Incremental OPEX/Site/Year (options 1, 2, and 3) = $20K OPEX/year
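As a first-pass check on these inputs, the sketch below applies the 15% reduction to the 10,000 triggering sites and multiplies the avoided upgrades by the blended CAPEX and OPEX figures. It is a deliberately simple, single-cohort illustration, not the operator’s full savings model: it leaves out the 25% annual traffic growth and the subscriber count, and the `avoided_costs` function and its `years` parameter are hypothetical names introduced here.

```python
# Inputs from the operator profile and agreed assumptions above.
CONGESTED_SITES = 10_000     # sites triggering a capacity upgrade
REDUCTION_PCT = 15           # share of triggers avoided during the trial, in percent
CAPEX_PER_UPGRADE = 100_000  # blended incremental CAPEX per upgrade (USD)
OPEX_PER_SITE_YEAR = 20_000  # blended incremental OPEX per site per year (USD)


def avoided_costs(sites, reduction_pct, capex_per_upgrade, opex_per_site_year, years=1):
    """Return (avoided upgrades, avoided CAPEX, avoided OPEX over `years` years).

    Single-cohort sketch: it does not model the 25% annual traffic growth.
    """
    avoided = sites * reduction_pct // 100  # integer math avoids float rounding
    capex = avoided * capex_per_upgrade
    opex = avoided * opex_per_site_year * years
    return avoided, capex, opex


if __name__ == "__main__":
    upgrades, capex, opex = avoided_costs(
        CONGESTED_SITES, REDUCTION_PCT, CAPEX_PER_UPGRADE, OPEX_PER_SITE_YEAR
    )
    print(f"Upgrades avoided: {upgrades:,}")
    print(f"CAPEX avoided:    ${capex:,}")
    print(f"OPEX avoided:     ${opex:,} per year")
```

On these inputs, the 15% reduction corresponds to 1,500 avoided upgrades, about $150M in avoided CAPEX and about $30M per year in avoided OPEX for that one cohort of sites; passing `years=5`, for example, accumulates five years of OPEX on the same sites.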