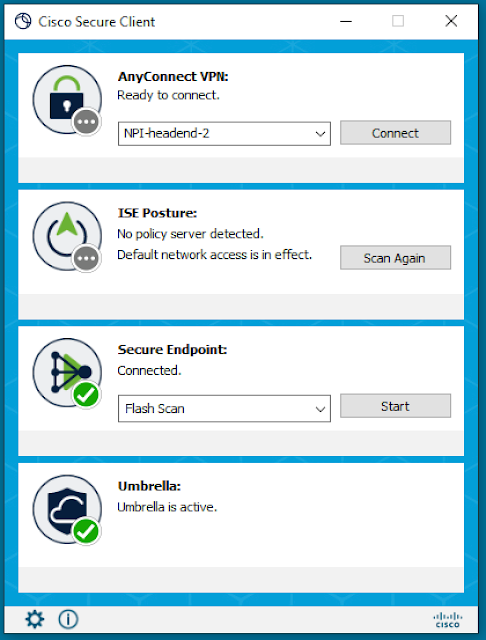

We’re excited to announce Cisco Secure Client, formerly AnyConnect, as the new version of one of the most widely deployed security agents. As the unified security agent for Cisco Secure, it addresses common operational use cases applicable to Cisco Secure endpoint agents. Those who install Secure Client’s next-generation software will benefit from a shared user interface for tighter and simplified management of Cisco agents for endpoint security.

Sunday, 31 July 2022

More than a VPN: Announcing Cisco Secure Client (formerly AnyConnect)

Thursday, 28 July 2022

Your Network, Your Way: A Journey to Full Cloud Management of Cisco Catalyst Products

At Cisco Live 2022 in Las Vegas, Nevada (June 12-16), there were many announcements about our newest innovations to power the new era of hybrid workspace, distributed network environments, and the customer’s journey to the cloud. Among the revelations was our strategy to accelerate our customers’ transition to a cloud-managed networking experience.

Our customers asked, and we answered: Cisco announced that Catalyst customers can choose the operational model that best fits their needs: Cloud Management/Monitoring through the Meraki Dashboard or On-Prem/Public/Private Cloud with Cisco DNA Center.

So WHY THIS and WHY NOW?

And HOW to get started?

Executing a Cloud Ready Strategy

License Flexibility

Customer Use Cases

Why is this important?

Tuesday, 26 July 2022

Perspectives on the Future of Service Provider Networking: Distributed Data Centers and Edge Services

The ongoing global pandemic, now approaching its third year, has profoundly illustrated the critical role of the internet in society, changing the way we work, live, play, and learn. This role will continue to expand as digital transformation becomes even more pervasive. However, connecting more users, devices, applications, content, and data with one another is only one dimension to this expansion.

Another is the new and emerging types of digital experiences such as cloud gaming, augmented reality/virtual reality (AR/VR), telesurgery using robotic assistance, autonomous vehicles, intelligent kiosks, and Internet of Things (IoT)-based smart cities/communities/homes. These emerging digital experiences are more interactive, bandwidth-hungry, and latency-sensitive, and they generate massive amounts of data useful for analytics. Hence, the performance of public and private networks will be increasingly important for delivering superior digital experiences.

Network performance, however, is increasingly dependent on the complex internet topology that’s evolving from a network of networks to a network of data centers. Data centers are generally where applications, content, and data are hosted as workloads using compute, storage, and networking infrastructure. Data centers may be deployed on private premises, at colocation facilities, in the public cloud, or in a virtual private cloud and each may connect to the public internet, a private network, or both. Regardless, service providers, including but not limited to communication service providers (CSPs) that provide network connectivity services, carrier neutral providers that offer colocation/data center services, cloud providers that deliver cloud services, content providers that supply content distribution services, and software-as-a-service (SaaS) application providers all play a vital role in both digital experiences and network performance. However, each service provider can only control the performance of its own network and associated on-net infrastructure and not anything outside of its network infrastructure (i.e., off-net). For this reason, cloud providers offer dedicated network interconnects so their customers can bypass the internet and receive superior network performance for cloud services.

New and emerging digital experiences depend on proximity

In the past, service providers commonly deployed a relatively small number of large data centers and network interconnects at centralized locations. In other words, there was one large-scale data center (with optional redundant infrastructure) per geographic region, to which all applicable traffic within the region was backhauled. New and emerging digital experiences, however, as referenced above, are stressing these centralized data center and interconnect architectures given their much tighter performance requirements. At the most fundamental level, the speed of light determines how quickly traffic can traverse a network, while computational power defines how fast applications and associated data can be processed. Therefore, proximity of data center workloads to users and devices where the data is generated and/or consumed is a gating factor for high-quality service delivery of these emerging digital experiences.
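To put a rough number on the speed-of-light constraint, here is a minimal sketch. It assumes light travels through optical fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum), and the three distances are hypothetical examples rather than figures from any real deployment. It ignores queuing, routing, and processing delays, so the results are best-case floors.

```python
# Approximate distance light covers in optical fiber per millisecond.
# Assumption: about two-thirds of the vacuum speed of light, i.e. ~200 km/ms.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Return the best-case round-trip propagation delay in milliseconds."""
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical fiber distances between a user and a data center.
    for label, km in [("metro edge", 50), ("regional", 500), ("centralized", 2000)]:
        print(f"{label:12s} {km:5d} km -> {round_trip_ms(km):5.1f} ms round trip")
```

Under these assumptions, a data center 2,000 km away costs 20 ms of round-trip delay before any processing happens, while one 50 km away costs 0.5 ms. No amount of added compute at the centralized site can recover that difference.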

Consider the following:

◉ High bandwidth video content such as high-definition video on demand, streaming video, and cloud-based gaming. Caching such content closer to the user not only improves network efficiency (i.e., less backhaul), but it also provides a superior digital experience given lower network latency and higher bandwidth transfer rates.

◉ Emerging AR/VR applications represent new revenue opportunities for service providers and the industry. However, they depend on ultra-low network latency and must be hosted close to the users and devices.

◉ Private 5G services including massive IoT also represent a significant new revenue opportunity for CSPs. Given the massive logical network scale and massive volume of sensor data anticipated, data center workload proximity will be required to deliver ultra-reliable low-latency communications (URLLC) and massive machine-type communications (mMTC) services as well as host 5G user plane functions so that local devices can communicate directly with one another at low latency and high bandwidth. Proximity also improves network efficiency by reducing backhaul traffic. That is, proximity enables the bulk of sensor data to be processed locally while only the sensor data that may be needed later is backhauled.

◉ 5G coordinated multipoint technologies can also provide advanced radio service performance in 5G and LTE-A deployments. This requires radio control functions to be deployed in proximity to the remote radio heads.

◉ Developing data localization and data residency laws are another potential driver for data center proximity to ensure user data remains in the applicable home country.

These are just a few examples that illustrate the increasing importance of proximity between applications, content, and data hosted in data centers with users/devices. They also illustrate how the delivery of new and emerging digital experiences will be dependent on the highest levels of network performance. Therefore, to satisfy these emerging network requirements and deliver superior digital experiences to customers, service providers should transform their data center and interconnect architectures from a centralized model to a highly distributed model (i.e., edge compute/edge cloud) where data center infrastructure and interconnects are deployed at all layers of the service provider network (e.g., local access, regional, national, global) and with close proximity to users/devices where the data is generated and/or consumed.

This transformation should also include the ubiquitous use of a programmable network that allows the service provider to intelligently place workloads across its distributed data center infrastructure as well as intelligently route traffic based upon service/application needs (e.g., to/from the optimal data center), a technique we refer to as intent-based networking. Further, in addition to being highly distributed, edge data centers should be heterogeneous and not one specific form factor. Rather, different categories of edge data centers should exist and be optimized for different types of services and use cases.
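The placement idea can be sketched as a small selection function. This is only an illustration of matching a declared intent to a site, not Cisco's implementation: the `DataCenter` and `Intent` types, the site names, the latency and capacity figures, and the tie-breaking policy (prefer the feasible site with the most spare capacity) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class DataCenter:
    name: str
    rtt_ms: float   # round-trip time from the users being served (hypothetical)
    free_cpu: int   # spare compute units at the site (hypothetical)


@dataclass(frozen=True)
class Intent:
    max_rtt_ms: float  # latency the service can tolerate
    cpu_needed: int    # compute the workload requires


def place(intent: Intent, sites: Sequence[DataCenter]) -> Optional[DataCenter]:
    """Return a site that satisfies the intent, or None if no site qualifies.

    Assumed policy: among feasible sites, prefer the one with the most spare
    capacity so that scarce edge resources stay available for strict workloads.
    """
    feasible = [
        s for s in sites
        if s.rtt_ms <= intent.max_rtt_ms and s.free_cpu >= intent.cpu_needed
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda s: s.free_cpu)


# Hypothetical sites at three layers of a provider network.
SITES = [
    DataCenter("access-edge", rtt_ms=2, free_cpu=8),
    DataCenter("regional", rtt_ms=12, free_cpu=64),
    DataCenter("national", rtt_ms=40, free_cpu=512),
]

if __name__ == "__main__":
    print(place(Intent(max_rtt_ms=10, cpu_needed=4), SITES))    # latency-sensitive
    print(place(Intent(max_rtt_ms=100, cpu_needed=32), SITES))  # latency-tolerant
```

With these made-up numbers, a latency-sensitive workload lands on the access edge, while a latency-tolerant one is sent to the national site. The operator states what the service needs and the selection logic decides where it runs.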

Four categories of edge data centers

Cisco, for example, identifies four main categories of edge data centers for edge compute services:

1. Secure access service edge (SASE) for hosting distributed workloads related to connecting and securing users and devices. For example, secure gateways, DNS, cloud firewalls, VPN, data loss prevention, Zero Trust, cloud access security broker, cloud onramp, SD-WAN, etc.

2. Application edge for hosting distributed workloads related to protecting and accelerating applications and data. For example, runtime application self-protection, web application firewalls, bot detection, caching, content optimization, load balancing, etc.

3. Enterprise edge for hosting distributed workloads related to infrastructure platforms optimized for distributed applications and data. For example, voice/video, data center as a service (DCaaS), industrial IoT, consumer IoT, AI/ML, AR/VR, etc.

4. Carrier edge for hosting distributed workloads related to CSP edge assets (e.g., O-RAN) and services including connected cars, private LTE, 5G, localization, content and media delivery, enterprise services, etc.

Of course, applicability of these different categories of edge compute services will vary per service provider based on the specific types of services and use cases each intends to offer. Carriers/CSPs, for example, are in a unique position because they own the physical edge of the network and are on the path between the clouds, colocation/data centers, and users/devices. Of course, cloud providers and content providers are also in a unique position to bring high performance edge compute and storage closer to users/devices whether via expanding their locations and/or hosting directly on the customer’s premises. Similarly, carrier neutral providers (e.g., co-location/data centers) are also in a unique position given their dense interconnection of CSPs, cloud providers, content providers, and SaaS application providers.

Benefits of distributed data centers and edge services

Saturday, 23 July 2022

Why Manufacturers duplicate IPv4 addresses and how IE switches help solve the issues

If this topic piqued your interest, you’re probably impacted by or at least curious about duplicate IP Addresses in your industrial network. You are not alone. It can be a little bewildering. There doesn’t seem to be any reason in this day and age to have duplicate IP Addresses, let alone do it on purpose. Let’s unravel the mystery.

Companies that build sophisticated machines have made the transition to Internet Protocol as the communication protocol within their machines. IPv4 is the easiest protocol to use, and many software libraries based on IPv4 are readily available. These companies’ core competency is the electrical and mechanical aspect of their machines, not the software that runs the machine, and therefore they do not have sophisticated software teams. When you’re writing communication software and software is not your core competency, what is the easiest and least problematic way to identify the components within your machine? Answer: Static IP Addresses. The alternative to static IP Addresses is a more complicated process involving dynamic IP Address assignment, along with a complex task of identifying which IP Address the individual components received.

The IP Addresses were duplicated on purpose. The software in the machine uses static IP Addresses to identify individual machine components because it’s easier for the machine builders. Each machine they build has the same software (SW). Therefore, they use the same static IP Addresses. If you have purchased two or more of their machines, then you have duplicate IP Addresses. To be fair, it would be much harder and cost prohibitive to give each component of each machine a unique IP Address.
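A minimal sketch of how the duplication arises, using Python's standard `ipaddress` module. The machine names, component names, and addresses are hypothetical. The `translate` function shows one common way to make such machines coexist, a one-to-one address mapping per machine in the spirit of network address translation. It is an assumption for illustration and is not a switch configuration.

```python
import ipaddress
from collections import defaultdict

# Hypothetical inventory: two identical machines shipped with the same
# static addresses baked into their software.
MACHINES = {
    "machine-A": {"plc": "192.168.1.10", "hmi": "192.168.1.11", "drive": "192.168.1.12"},
    "machine-B": {"plc": "192.168.1.10", "hmi": "192.168.1.11", "drive": "192.168.1.12"},
}

# Hypothetical plant-side subnets, one per machine.
PLANT_SUBNETS = {"machine-A": "10.10.1.0/24", "machine-B": "10.10.2.0/24"}


def find_duplicates(inventory):
    """Map each address used more than once to the components that claim it."""
    owners = defaultdict(list)
    for machine, components in inventory.items():
        for component, addr in components.items():
            owners[ipaddress.ip_address(addr)].append(f"{machine}/{component}")
    return {ip: names for ip, names in owners.items() if len(names) > 1}


def translate(inventory, plant_subnets):
    """Build a one-to-one table from (machine, inside address) to a unique
    plant-side address, keeping the host portion of each inside address."""
    table = {}
    for machine, components in inventory.items():
        outside = ipaddress.ip_network(plant_subnets[machine])
        for addr in components.values():
            host_bits = int(ipaddress.ip_address(addr)) & int(outside.hostmask)
            table[(machine, addr)] = outside.network_address + host_bits
    return table


if __name__ == "__main__":
    for ip, names in find_duplicates(MACHINES).items():
        print(f"{ip} is claimed by {', '.join(names)}")
    for (machine, inside), outside in translate(MACHINES, PLANT_SUBNETS).items():
        print(f"{machine}: {inside} -> {outside}")
```

In this sketch each machine keeps its factory addresses internally, so the builder's software is untouched, while the rest of the plant sees six distinct addresses.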

Why is this a problem?

Solutions

Sample IOS CLI configuration for the Cisco Industrial Ethernet

Thursday, 21 July 2022

Enhancing Government Outcomes with Integrated Private 5G

Private 5G is now ready to be part of your enterprise wireless communications transformation strategy. While there has been extensive focus on ultra-wideband gigabit speeds from public Mobile Network Operators, there are even greater government expectations for 5G capabilities to assure the quality of service and empower new mission-critical use cases. 3GPP standards are enabling delivery of capabilities in three strategic 5G areas: enhanced Mobile Broadband (eMBB), Ultra-Reliable and Low Latency Communications (URLLC), and massive Machine Type Communications (mMTC). Private 5G is uniquely capable of addressing critical communications requiring interference-free spectrum, high throughput and/or low latency deterministic data delivery, and the ability to transfer terabytes of data without a metered service plan. The result will be a wide range of advanced public and private network wireless capabilities for high-definition video, advanced command and control, autonomous vehicles, and addressing previously overwhelming quantities of sensor data.

Private 5G Fundamentals

Cisco’s Private 5G solution is built on service provider class technology, tailored and optimized for enterprise consumption. For decades, Cisco has powered cellular networks around the world through advanced IP transport and 3GPP standards-based components, including our industry-leading Mobile Packet Core. Our new Private 5G solution delivers Wi-Fi-like simplicity through a cloud-native platform built on a services-based architecture and micro-services infrastructure. The solution offers a zero-touch delivery approach to on-premises elements that provide wireless connectivity between user devices and applications, while ensuring organizational and local data sovereignty. Cisco’s proven IoT platform manages the on-premises elements allowing for rapid turn-up and delivery of services, reducing government 5G learning curves and on-boarding burdens.

Better Together – An Enterprise Wireless Approach

An integrated private wireless strategy in which Private 5G and Wi-Fi 6 work together can deliver near-term transformative innovation as well as optimal user experiences and new mission-critical capabilities for the next generation of government mobility.

Better Together Outcomes – Optimized Experience / Minimized Costs

Key Zero Trust and Security Considerations

Tuesday, 19 July 2022

Security Resilience in APJC

As the world continues to face formidable challenges, one of the many things impacted is cybersecurity. While recent challenges have been varied, they have all contributed to great uncertainty. How can organizations stay strong and protect their environments amidst so much volatility?

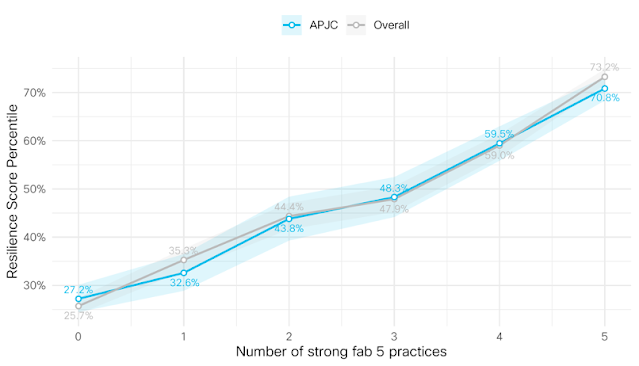

Lately we’ve been talking a lot about security resilience, and how companies can embrace it to stay the course no matter what happens. By building a resilient security strategy, organizations can more effectively address unexpected disruptions and emerge stronger.

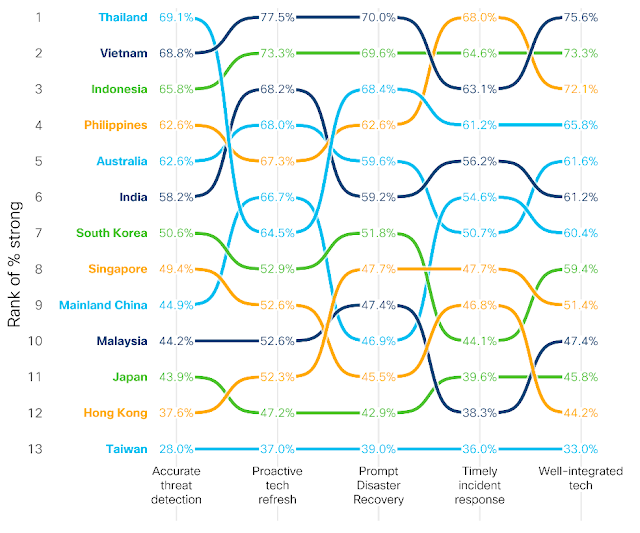

Through our Security Outcomes Study, Volume 2, we were able to benchmark how companies around the world are doing when it comes to cyber resilience. Recent blog posts have taken a look at security resilience in the EMEA and Americas regions, and this post assesses resilience in Asia Pacific, Japan and China (APJC).

While the Security Outcomes Study focuses on a dozen outcomes that contribute to overall security program success, for this analysis, we focused on four specific outcomes that are most critical for security resilience. These include: keeping up with the demands of the business, avoiding major cyber incidents, maintaining business continuity, and retaining talented personnel.

Security performance across the region

The following chart shows the proportion of organizations in each market within APJC that reported “excelling” in these four outcomes:

Improving resilience in APJC

Boost your organization’s cyber resilience

Saturday, 16 July 2022

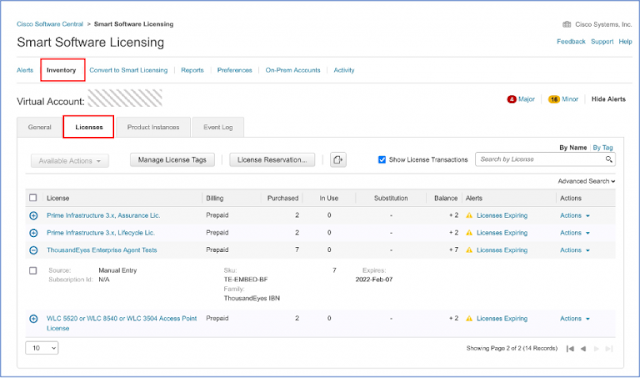

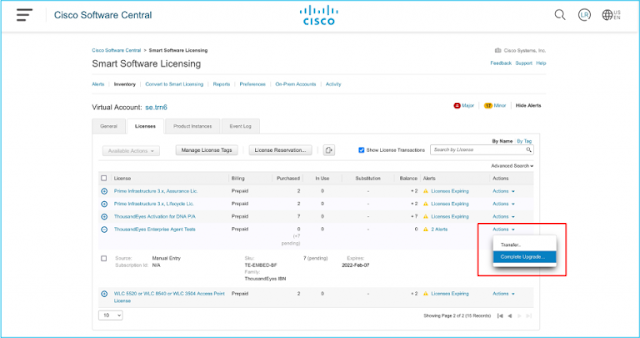

6 Steps to Unlocking ThousandEyes for Catalyst 9000

Modern businesses rely on network connectivity, including across the Internet and public cloud. The more secure, stable, and reliable these networks are, the better the user experience is likely to be. Understanding WAN performance, including Internet transit networking and how it affects application delivery, is key to optimizing your network architecture and solving business-impacting issues.

Troubleshooting any technical issue in environments so distributed and fast-changing can be a difficult and tedious process. First, there is the scope of what the problem could be. Is it a configuration error? An application issue? Did someone forget to change a DNS entry? Without knowing what domain the problem resides in, it is hard to approach troubleshooting effectively.

To help enterprises meet the needs and requirements of their expanded enterprise networks, new and existing customers of Catalyst 9300 and 9400 switches have a powerful entitlement in their toolkit: ThousandEyes Enterprise Agents. ThousandEyes runs on many platforms, but there are several advantages to running ThousandEyes tests from Catalyst 9000 switches.

Installing it is easy, and you can use your existing resources to monitor connectivity and digital experience as close to the end-user as possible.